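A recurrent neural network (RNN) is a type of artificial neural network for processing sequential data, that is, finite or infinite streams of interdependent data such as time series, text strings, and conversations.

A long short-term memory network (LSTM) is a special kind of recurrent neural network that can learn long-term dependencies, and it is currently the most commonly used recurrent neural network.

Note: for an introduction to recurrent neural networks, see our tutorial Deep Learning – Recurrent Neural Networks (RNN).

Our example trains an LSTM model. During training, the model learns a short text. Once trained, the model can predict the next word after being given a short phrase as input.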

Dataset

The dataset is a short text, the Aesop's fable about the mice who want to bell the cat:

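long ago , the mice had a general council to consider what measures they could take to outwit their common enemy , the cat . some said this , and some said that but at last a young mouse got up and said he had a proposal to make , which he thought would meet the case . you will all agree , said he , that our chief danger consists in the sly and treacherous manner in which the enemy approaches us . now , if we could receive some signal of her approach , we could easily escape from her . i venture , therefore , to propose that a small bell be procured , and attached by a ribbon round the neck of the cat . by this means we should always know when she was about , and could easily retire while she was in the neighbourhood . this proposal met with general applause , until an old mouse got up and said that is all very well , but who is to bell the cat ? the mice looked at one another and nobody spoke . then the old mouse said it is easy to propose impossible remedies .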

This short text contains 112 unique symbols. Both words and punctuation marks count as symbols.
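As a rough illustration of how such a symbol count can be obtained, the sketch below splits a text on whitespace and counts the distinct tokens. The sample string is only the opening sentence of the fable and is assumed to be lowercased with punctuation separated by spaces; `count_symbols` is a hypothetical helper name, not part of the tutorial code.

```python
from collections import Counter

# Assumed sample: the opening sentence of the fable, lowercased and with
# punctuation marks separated from words by spaces.
sample_text = (
    "long ago , the mice had a general council to consider what measures "
    "they could take to outwit their common enemy , the cat ."
)


def count_symbols(text):
    """Split on whitespace and count how often each symbol occurs."""
    return Counter(text.split())


counts = count_symbols(sample_text)
print("total symbols:", sum(counts.values()))
print("unique symbols:", len(counts))
print("two most frequent:", counts.most_common(2))
```

Applied to the whole training file instead of one sentence, the same count should give the vocabulary size used by the model.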

Training

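If we feed the LSTM 3 symbols in their correct order together with 1 label symbol (the symbol that follows them), the model eventually learns to predict the next symbol correctly (Figure 1).

Figure 1. An LSTM cell with three inputs and one output.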

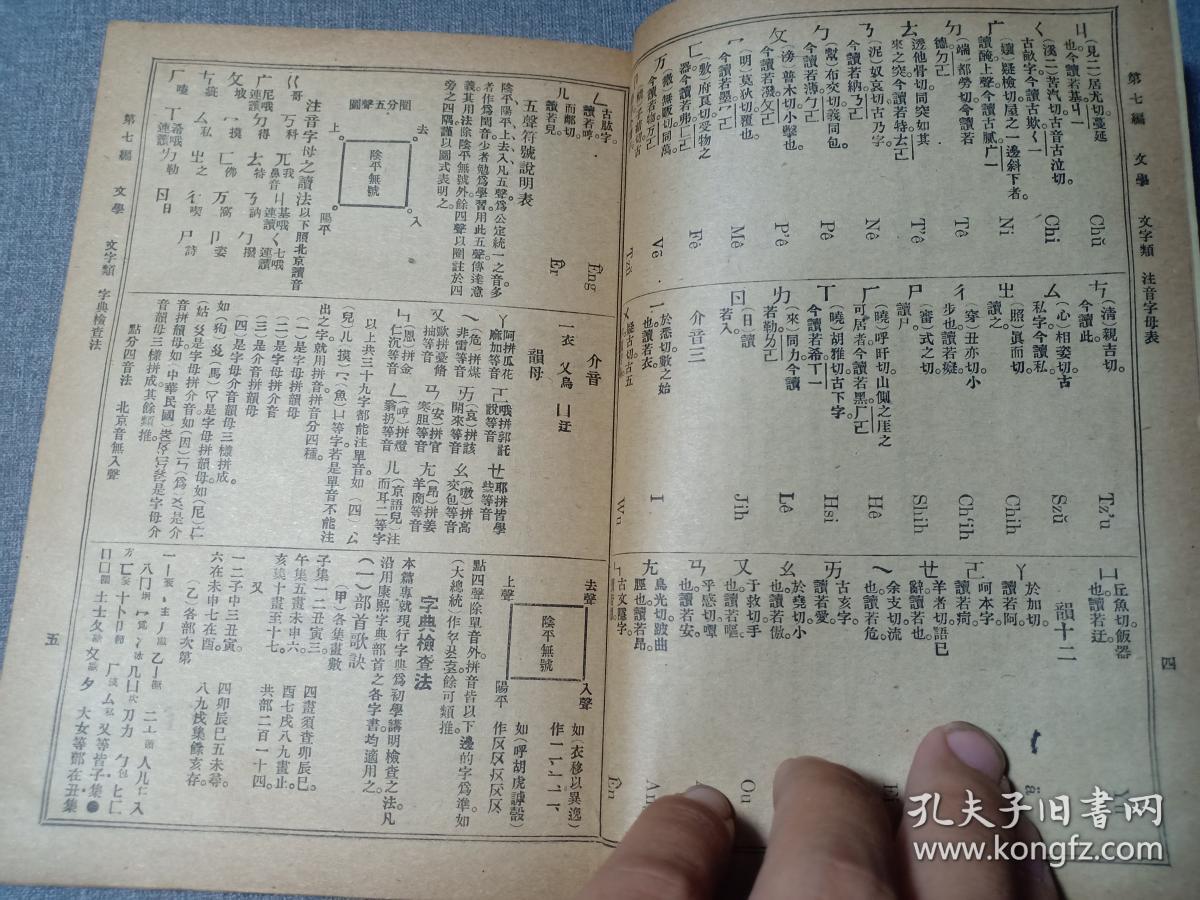
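The three-inputs, one-label scheme can be sketched in plain Python as a sliding window over the symbol list. `make_samples` is a hypothetical helper used only for illustration. It enumerates every window, whereas the training script picks a randomized starting offset and then advances in steps of `n_input + 1`.

```python
def make_samples(symbols, n_input=3):
    """Pair every run of n_input consecutive symbols with the symbol that follows it."""
    samples = []
    for offset in range(len(symbols) - n_input):
        inputs = symbols[offset:offset + n_input]
        label = symbols[offset + n_input]
        samples.append((inputs, label))
    return samples


# Assumed sample: the first nine symbols of the fable.
symbols = "long ago , the mice had a general council".split()
samples = make_samples(symbols)

for inputs, label in samples[:3]:
    print(inputs, "->", label)
```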

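Technically, an LSTM only understands numbers. The short text above therefore needs some preprocessing: each unique word and punctuation mark is replaced with a number, for 112 unique symbols in total.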

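We create two dictionaries: one maps each word or punctuation mark to a number, and the other (the reverse dictionary) maps each number back to its word or punctuation mark. For example, with the 112 unique symbols in the text above, the first dictionary contains entries such as [ "," : 0 ], [ "the" : 1 ], …, [ "" : 37 ], …, [ "spoke" : 111 ]. The reverse dictionary is used to decode the output of the LSTM.

Both the input and the output of the LSTM are numbers, so the LSTM should output a numeric code that stands for a symbol. For example, an output of 37 stands for the word that the dictionary maps to 37.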
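The two dictionaries can be tried out on a toy sentence. The sketch below mirrors the `build_dataset` function from the listing in this tutorial, where codes are assigned in order of decreasing frequency. The eight-symbol sentence is a made-up example, so its codes differ from the 112-symbol codes quoted above.

```python
from collections import Counter


def build_dataset(words):
    """Assign integer codes to symbols, most frequent first, and build the inverse map."""
    dictionary = {}
    for word, _ in Counter(words).most_common():
        dictionary[word] = len(dictionary)
    reverse_dictionary = {code: word for word, code in dictionary.items()}
    return dictionary, reverse_dictionary


# Made-up toy sentence, used only for illustration.
words = "the cat , the mice , the bell".split()
dictionary, reverse_dictionary = build_dataset(words)

encoded = [dictionary[w] for w in words]          # symbols -> numbers
decoded = [reverse_dictionary[c] for c in encoded]  # numbers -> symbols

print(dictionary)
print(encoded)
print(decoded)
```

Encoding followed by decoding returns the original symbols, which is the property the reverse dictionary provides when the model's numeric output is turned back into text.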

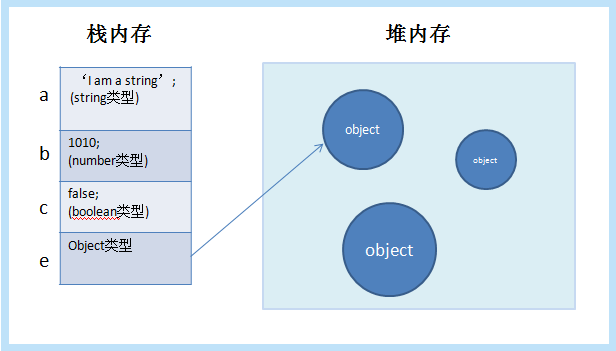

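In practice, however, the LSTM outputs a 112-element vector in which each element gives the probability of the corresponding symbol, and the symbol with the highest probability is the final prediction (Figure 2). Strictly speaking, the listing produces unnormalized scores (logits), and the softmax that turns them into probabilities is applied inside the loss function; the highest score and the highest probability always select the same symbol.

Figure 2. Each input symbol is replaced with its numeric code. The output is a vector holding the probability of each symbol at this step. Taking the code with the highest probability and looking it up in the reverse dictionary yields the predicted symbol.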
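The decoding step described in Figure 2 can be sketched without TensorFlow. The example assumes a hypothetical 5-symbol vocabulary and made-up scores in place of the real 112-element output; only the softmax, the argmax, and the reverse-dictionary lookup carry over to the actual model.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities (numerically stable form)."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical 5-symbol vocabulary and made-up network scores.
reverse_dictionary = {0: ",", 1: "the", 2: "mice", 3: "cat", 4: "."}
logits = [0.5, 2.0, 0.1, 3.5, -1.0]

probs = softmax(logits)
best_code = max(range(len(probs)), key=probs.__getitem__)  # index of the highest probability
predicted_symbol = reverse_dictionary[best_code]

print(best_code, predicted_symbol)
```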

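Implementation

The implementation is shown below. It relies on tf.contrib and therefore requires TensorFlow 1.x: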

    from __future__ import print_function

    import collections
    import random
    import time

    import numpy as np
    import tensorflow as tf
    from tensorflow.contrib import rnn  # tf.contrib exists only in TensorFlow 1.x

    start_time = time.time()


    def elapsed(sec):
        """Format a duration given in seconds as seconds, minutes, or hours."""
        if sec < 60:
            return str(sec) + " sec"
        elif sec < (60 * 60):
            return str(sec / 60) + " min"
        else:
            return str(sec / (60 * 60)) + " hr"


    # Log directory for TensorBoard
    logs_path = './train/rnn_words'
    writer = tf.summary.FileWriter(logs_path)

    # Short text used for training
    training_file = 'belling_the_cat.txt'


    def read_data(fname):
        """Read the text file and return its whitespace-separated symbols as an array."""
        with open(fname) as f:
            content = f.readlines()
        content = [x.strip() for x in content]
        content = [word for line in content for word in line.split()]
        return np.array(content)


    training_data = read_data(training_file)
    print("Loaded training data...")


    def build_dataset(words):
        """Build the symbol -> number dictionary and the number -> symbol reverse dictionary."""
        count = collections.Counter(words).most_common()
        dictionary = dict()
        for word, _ in count:
            dictionary[word] = len(dictionary)
        reverse_dictionary = dict(zip(dictionary.values(), dictionary.keys()))
        return dictionary, reverse_dictionary


    # Create the dictionary and the reverse dictionary
    dictionary, reverse_dictionary = build_dataset(training_data)

    # Number of unique symbols
    vocab_size = len(dictionary)

    # Parameters
    learning_rate = 0.001
    training_iters = 50000
    display_step = 1000
    n_input = 3

    # Number of units in the RNN cell
    n_hidden = 512

    # tf Graph input
    x = tf.placeholder("float", [None, n_input, 1])
    y = tf.placeholder("float", [None, vocab_size])

    # Weights and biases of the RNN output layer
    weights = {'out': tf.Variable(tf.random_normal([n_hidden, vocab_size]))}
    biases = {'out': tf.Variable(tf.random_normal([vocab_size]))}


    def RNN(x, weights, biases):
        # Reshape to [-1, n_input]
        x = tf.reshape(x, [-1, n_input])

        # Generate an n_input-element sequence of inputs
        # (e.g. [had] [a] [general] -> [20] [6] [33])
        x = tf.split(x, n_input, 1)

        # 2-layer LSTM, each layer has n_hidden units.
        # Average Accuracy= 95.20% at 50k iter
        rnn_cell = rnn.MultiRNNCell([rnn.BasicLSTMCell(n_hidden), rnn.BasicLSTMCell(n_hidden)])

        # 1-layer LSTM with n_hidden units but with lower accuracy.
        # Average Accuracy= 90.60% at 50k iter
        # Uncomment the line below to test, and comment out the 2-layer rnn.MultiRNNCell above
        # rnn_cell = rnn.BasicLSTMCell(n_hidden)

        # Generate the prediction
        outputs, states = rnn.static_rnn(rnn_cell, x, dtype=tf.float32)

        # There are n_input outputs, but we only want the last one
        return tf.matmul(outputs[-1], weights['out']) + biases['out']


    pred = RNN(x, weights, biases)

    # Loss
    cost = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=pred, labels=y))
    # Optimizer
    optimizer = tf.train.RMSPropOptimizer(learning_rate=learning_rate).minimize(cost)

    # Model evaluation
    correct_pred = tf.equal(tf.argmax(pred, 1), tf.argmax(y, 1))
    accuracy = tf.reduce_mean(tf.cast(correct_pred, tf.float32))

    # Initialize the variables
    init = tf.global_variables_initializer()

    # Run the graph
    with tf.Session() as session:
        session.run(init)
        step = 0
        offset = random.randint(0, n_input + 1)
        end_offset = n_input + 1
        acc_total = 0
        loss_total = 0

        writer.add_graph(session.graph)

        while step < training_iters:
            # Generate a minibatch. Add some randomness to the selection process.
            if offset > (len(training_data) - end_offset):
                offset = random.randint(0, n_input + 1)

            # Prepare the input sample and its label
            symbols_in_keys = [[dictionary[str(training_data[i])]] for i in range(offset, offset + n_input)]
            symbols_in_keys = np.reshape(np.array(symbols_in_keys), [-1, n_input, 1])

            symbols_out_onehot = np.zeros([vocab_size], dtype=float)
            symbols_out_onehot[dictionary[str(training_data[offset + n_input])]] = 1.0
            symbols_out_onehot = np.reshape(symbols_out_onehot, [1, -1])

            # Run optimizer, accuracy, cost, pred
            _, acc, loss, onehot_pred = session.run(
                [optimizer, accuracy, cost, pred],
                feed_dict={x: symbols_in_keys, y: symbols_out_onehot})
            loss_total += loss
            acc_total += acc

            # Print progress every display_step steps
            if (step + 1) % display_step == 0:
                print("Iter= " + str(step + 1) + ", Average Loss= " +
                      "{:.6f}".format(loss_total / display_step) + ", Average Accuracy= " +
                      "{:.2f}%".format(100 * acc_total / display_step))
                acc_total = 0
                loss_total = 0
                symbols_in = [training_data[i] for i in range(offset, offset + n_input)]
                symbols_out = training_data[offset + n_input]
                # np.argmax avoids adding a new tf.argmax op to the graph on every call
                symbols_out_pred = reverse_dictionary[int(np.argmax(onehot_pred, 1)[0])]
                print("%s - [%s] vs [%s]" % (symbols_in, symbols_out, symbols_out_pred))

            # Advance step and offset
            step += 1
            offset += (n_input + 1)

        # Training finished, print a summary
        print("Optimization Finished!")
        print("Elapsed time: ", elapsed(time.time() - start_time))
        print("Run on command line.")
        print("\ttensorboard --logdir=%s" % (logs_path))
        print("Point your web browser to: http://localhost:6006/")

        # Test: read n_input words from the user and generate a continuation
        while True:
            prompt = "%s words: " % n_input
            sentence = input(prompt)
            sentence = sentence.strip()
            words = sentence.split(' ')
            if len(words) != n_input:
                continue
            try:
                symbols_in_keys = [dictionary[str(words[i])] for i in range(len(words))]
                # Predict 32 symbols in a row
                for i in range(32):
                    keys = np.reshape(np.array(symbols_in_keys), [-1, n_input, 1])
                    onehot_pred = session.run(pred, feed_dict={x: keys})
                    onehot_pred_index = int(np.argmax(onehot_pred, 1)[0])
                    sentence = "%s %s" % (sentence, reverse_dictionary[onehot_pred_index])
                    # Slide the window: drop the oldest symbol, append the prediction
                    symbols_in_keys = symbols_in_keys[1:]
                    symbols_in_keys.append(onehot_pred_index)
                print(sentence)
            except KeyError:
                print("Word not in dictionary")
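To make the loss in the listing more concrete, here is a minimal pure-Python sketch of what softmax cross-entropy computes for a single sample with a one-hot label. The helper names `one_hot` and `softmax_cross_entropy` and the label index 37 are illustrative assumptions; the listing itself relies on `tf.nn.softmax_cross_entropy_with_logits`.

```python
import math


def one_hot(index, size):
    """Return a vector of zeros with a single 1.0 at the given index."""
    vec = [0.0] * size
    vec[index] = 1.0
    return vec


def softmax_cross_entropy(logits, labels):
    """Compute -sum(labels * log(softmax(logits))) using the log-sum-exp trick."""
    top = max(logits)
    log_sum_exp = top + math.log(sum(math.exp(z - top) for z in logits))
    return -sum(y * (z - log_sum_exp) for y, z in zip(labels, logits))


vocab_size = 112
label = one_hot(37, vocab_size)  # illustrative target code

# All scores equal: every symbol gets probability 1/112, so the loss is ln(112).
uniform_loss = softmax_cross_entropy([0.0] * vocab_size, label)

# A much higher score on the correct symbol drives the loss towards zero.
confident_logits = [0.0] * vocab_size
confident_logits[37] = 10.0
confident_loss = softmax_cross_entropy(confident_logits, label)

print(uniform_loss, confident_loss)
```

The uniform case, ln(112) ≈ 4.72, is a useful reference point when reading the average loss values that the training loop prints: a model that has learned nothing about the text would sit near that value.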

Output

    Iter= 1000, Average Loss= 4.428141, Average Accuracy= 5.10%
    ['nobody', 'spoke', '.'] - [then] vs [then]
    Iter= 2000, Average Loss= 2.937925, Average Accuracy= 17.60%
    ['?', 'the', 'mice'] - [looked] vs [looked]
    Iter= 3000, Average Loss= 2.401870, Average Accuracy= 31.00%
    ['an', 'old', 'mouse'] - [got] vs [got]
    Iter= 4000, Average Loss= 2.079050, Average Accuracy= 46.10%
    ['when', 'she', 'was'] - [about] vs [about]
    Iter= 5000, Average Loss= 1.756826, Average Accuracy= 52.40%
    ['a', 'small', 'bell'] - [be] vs [be]
    Iter= 6000, Average Loss= 1.620517, Average Accuracy= 57.80%
    ['from', 'her', '.'] - [i] vs [cat]
    Iter= 7000, Average Loss= 1.410994, Average Accuracy= 61.50%
    ['some', 'signal', 'of'] - [her] vs [be]
    Iter= 8000, Average Loss= 1.340336, Average Accuracy= 65.60%
    ['enemy', 'approaches', 'us'] - [.] vs [,]
    ...
    Iter= 48000, Average Loss= 0.447481, Average Accuracy= 90.60%
    ['general', 'council', 'to'] - [consider] vs [consider]
    Iter= 49000, Average Loss= 0.527762, Average Accuracy= 89.30%
    ['ago', ',', 'the'] - [mice] vs [mice]
    Iter= 50000, Average Loss= 0.375872, Average Accuracy= 91.50%
    ['spoke', '.', 'then'] - [the] vs [the]
    Optimization Finished!
    Elapsed time: 31.482173871994018 min
    Run on command line.
    tensorboard --logdir=./train/rnn_words
    Point your web browser to: http://localhost:6006/
    3 words: the mice had
    the mice had a general council to said that is all a consider what measures they enemy , the cat . by this means we should always know when when when when when when when
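The generated sentence at the end of the log comes from feeding each prediction back in as input. The sketch below reproduces that sliding-window loop with a hypothetical lookup table, `toy_model`, standing in for the trained network; the table and its fallback symbol are invented for illustration and say nothing about what the real model has learned.

```python
def generate(seed, predict_next, n_steps=32):
    """Predict one symbol at a time, sliding the input window forward after each step."""
    window = list(seed)
    sentence = list(seed)
    for _ in range(n_steps):
        next_symbol = predict_next(tuple(window))
        sentence.append(next_symbol)
        window = window[1:] + [next_symbol]  # drop the oldest symbol, append the prediction
    return sentence


# Hypothetical stand-in for the trained model: known windows map to the next
# symbol, and every unknown window falls back to "when".
toy_model = {
    ("the", "mice", "had"): "a",
    ("mice", "had", "a"): "general",
    ("had", "a", "general"): "council",
}


def predict_next(window):
    return toy_model.get(window, "when")


result = generate(["the", "mice", "had"], predict_next, n_steps=8)
print(" ".join(result))
```

Once the window holds a combination for which the predictor keeps returning the same symbol, every later step repeats it. This is one plausible reading of the run of "when" at the end of the generated sentence, since the model only ever sees its own last three outputs.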

![Image 2](https://images.43s.cn/wp-content/uploads//2022/06/1655198222795_3.jpg)

![Image 3](https://images.43s.cn/wp-content/uploads//2022/06/1655198222795_4.jpg)

![Image 4](https://images.43s.cn/wp-content/uploads//2022/06/1655198222795_6.jpg)
