2022-02-11

参考: tf. 和 tf..

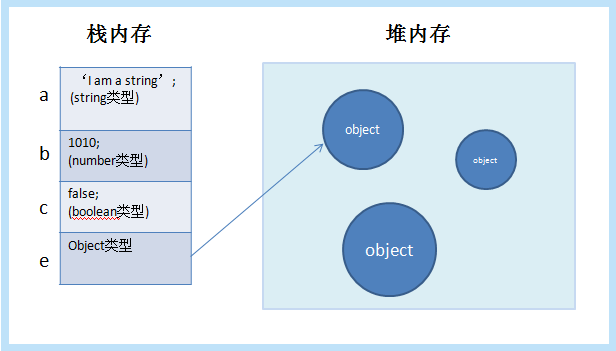

对于卷积层,批量归一化发生在卷积计算之后和应用激活函数之前。训练阶段:如果卷积计算输出多个通道,我们需要对这些通道的输出进行批量归一化,每个通道都有独立的拉伸和偏移参数,都是标量。假设 mini-batch 中有 m 个样本。在单个通道上,假设卷积计算输出的高度和宽度分别为 p 和 q。我们需要同时对通道中的 m×p×q 个元素进行批量归一化。在对这些元素进行归一化时,我们使用相同的均值和方差,即通道中 m×p×q 个元素的均值和方差。测试阶段:用训练阶段直接获得的整个训练集的均值和方差进行归一化。

我们用一个mxnet版本的代码来了解一下具体的实现:

def batch_norm(X, gamma, beta, moving_mean, moving_var, eps, momentum): # 通过autograd来判断当前模式是训练模式还是预测模式 if not autograd.is_training(): # 如果是在预测模式下,直接使用传入的移动平均所得的均值和方差 X_hat = (X - moving_mean) / nd.sqrt(moving_var + eps) else: assert len(X.shape) in (2, 4) if len(X.shape) == 2: # 使用全连接层的情况,计算特征维上的均值和方差 mean = X.mean(axis=0) var = ((X - mean) ** 2).mean(axis=0) else: # 使用二维卷积层的情况,计算通道维上(axis=1)的均值和方差。这里我们需要保持 # X的形状以便后面可以做广播运算 mean = X.mean(axis=(0, 2, 3), keepdims=True) var = ((X - mean) ** 2).mean(axis=(0, 2, 3), keepdims=True) # 训练模式下用当前的均值和方差做标准化 X_hat = (X - mean) / nd.sqrt(var + eps) # 更新移动平均的均值和方差 moving_mean = momentum * moving_mean + (1.0 - momentum) * mean moving_var = momentum * moving_var + (1.0 - momentum) * var Y = gamma * X_hat + beta # 拉伸和偏移 return Y, moving_mean, moving_var class BatchNorm(nn.Block): def __init__(self, num_features, num_dims, **kwargs): super(BatchNorm, self).__init__(**kwargs) if num_dims == 2: shape = (1, num_features) else: shape = (1, num_features, 1, 1) # 参与求梯度和迭代的拉伸和偏移参数,分别初始化成1和0 self.gamma = self.params.get('gamma', shape=shape, init=init.One()) self.beta = self.params.get('beta', shape=shape, init=init.Zero()) # 不参与求梯度和迭代的变量,全在内存上初始化成0 self.moving_mean = nd.zeros(shape) self.moving_var = nd.zeros(shape) def forward(self, X): # 如果X不在内存上,将moving_mean和moving_var复制到X所在显存上 if self.moving_mean.context != X.context: self.moving_mean = self.moving_mean.copyto(X.context) self.moving_var = self.moving_var.copyto(X.context) # 保存更新过的moving_mean和moving_var Y, self.moving_mean, self.moving_var = batch_norm( X, self.gamma.data(), self.beta.data(), self.moving_mean, self.moving_var, eps=1e-5, momentum=0.9) return Y

查看代码

2. 实现

目前有三种实现方式。

2.1 tf.nn。

该函数是一种低级实现方法。使用时,mean、scale等参数需要自己传递和更新。因此,实际使用中需要自己封装函数。一般不建议使用,但需要了解原理。非常有帮助。

import tensorflow as tf def batch_norm(x, name_scope, training, epsilon=1e-3, decay=0.99): """ Assume nd [batch, N1, N2, ..., Nm, Channel] tensor""" with tf.variable_scope(name_scope): size = x.get_shape().as_list()[-1] scale = tf.get_variable('scale', [size], initializer=tf.constant_initializer(0.1)) offset = tf.get_variable('offset', [size]) pop_mean = tf.get_variable('pop_mean', [size], initializer=tf.zeros_initializer(), trainable=False) pop_var = tf.get_variable('pop_var', [size], initializer=tf.ones_initializer(), trainable=False) batch_mean, batch_var = tf.nn.moments(x, list(range(len(x.get_shape())-1))) train_mean_op = tf.assign(pop_mean, pop_mean * decay + batch_mean * (1 - decay)) train_var_op = tf.assign(pop_var, pop_var * decay + batch_var * (1 - decay)) def batch_statistics(): with tf.control_dependencies([train_mean_op, train_var_op]): return tf.nn.batch_normalization(x, batch_mean, batch_var, offset, scale, epsilon) def population_statistics(): return tf.nn.batch_normalization(x, pop_mean, pop_var, offset, scale, epsilon) return tf.cond(training, batch_statistics, population_statistics) is_traing = tf.placeholder(dtype=tf.bool) input = tf.ones([1, 2, 2, 3]) output = batch_norm(input, name_scope='batch_norm_nn', training=is_traing)

查看代码

© 版权声明

本站下载的源码均来自公开网络收集转发二次开发而来,

若侵犯了您的合法权益,请来信通知我们1413333033@qq.com,

我们会及时删除,给您带来的不便,我们深表歉意。

下载用户仅供学习交流,若使用商业用途,请购买正版授权,否则产生的一切后果将由下载用户自行承担,访问及下载者下载默认同意本站声明的免责申明,请合理使用切勿商用。

THE END

暂无评论内容