2022-02-24

Deep Learning IBM Class 5 –

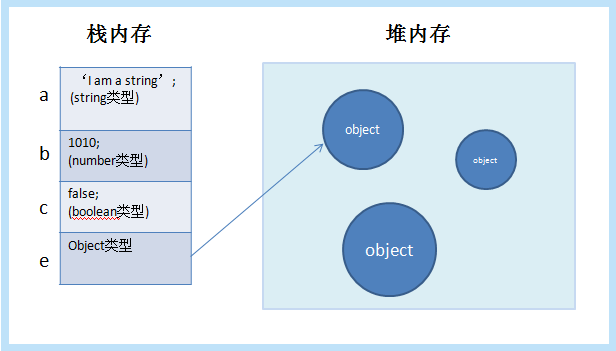

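This class uses a deep belief network (DBN) for handwriting recognition. One problem with traditional multilayer perceptrons and other neural networks is that backpropagation can get stuck in a local minimum. When the error surface contains several valleys, gradient descent may settle in one that is not the deepest. The rest of this class shows how a DBN addresses this problem.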
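The local-minimum problem can be seen on a one-dimensional toy example. The sketch below is only an illustration: the error function `f`, the learning rate, and the two starting points are assumptions chosen for the demonstration, not taken from the class. Plain gradient descent is run from two starting points and ends in two different valleys, only one of which is the deepest.

```python
def f(x):
    # Toy "error surface" with two valleys of different depth (illustrative choice)
    return x**4 - 3 * x**2 + x


def grad_f(x):
    # Derivative of f
    return 4 * x**3 - 6 * x + 1


def gradient_descent(x0, lr=0.01, steps=2000):
    # Repeatedly step against the gradient, starting from x0
    x = x0
    for _ in range(steps):
        x -= lr * grad_f(x)
    return x


x_right = gradient_descent(2.0)   # starts on the right-hand slope
x_left = gradient_descent(-2.0)   # starts on the left-hand slope

print(f"start  2.0 -> x = {x_right:.3f}, error = {f(x_right):.3f}")
print(f"start -2.0 -> x = {x_left:.3f}, error = {f(x_left):.3f}")
```

Both runs stop where the gradient is close to zero, but the run that starts at 2.0 ends in the shallower valley. Which valley gradient descent reaches depends on where it starts, which is why a good starting point matters.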

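A deep belief network addresses local minima with an additional pretraining step. Pretraining runs before backpropagation, so the network starts from an error that is already close to the optimum; backpropagation then slowly reduces the remaining error. A deep belief network has two main parts. The first is a stack of restricted Boltzmann machines (RBMs), used to pretrain the network. The second is a feedforward backpropagation network, which fine-tunes the result of the RBM stack.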
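As a rough sketch of how the RBM stack is wired, each RBM's hidden layer serves as the visible layer of the next one. The helper `rbm_layer_shapes` and the sizes used here (784 inputs, hidden layers of 500, 200 and 50 units) are hypothetical values for illustration; the source does not state them at this point.

```python
def rbm_layer_shapes(input_size, hidden_sizes):
    """Return the (input_size, output_size) pair for each RBM in the stack."""
    shapes = []
    for size in hidden_sizes:
        shapes.append((input_size, size))
        # The next RBM's visible layer is this RBM's hidden layer
        input_size = size
    return shapes


# Hypothetical sizes: 784 inputs (e.g. a 28x28 image) and three hidden layers
shapes = rbm_layer_shapes(784, [500, 200, 50])
for i, (n_in, n_out) in enumerate(shapes, start=1):
    print(f"RBM {i}: {n_in} visible units -> {n_out} hidden units")
```

Each pair matches the `(input_size, output_size)` arguments that an RBM constructor would take, so the stack can be pretrained one layer at a time before fine-tuning.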

1. Load the libraries needed for the deep belief network

    # urllib is used to download the utils file from deeplearning.net
    import urllib.request

    response = urllib.request.urlopen('http://deeplearning.net/tutorial/code/utils.py')
    content = response.read().decode('utf-8')
    with open('utils.py', 'w') as target:
        target.write(content)

    # Import the math function for calculations
    import math
    # Tensorflow library. Used to implement machine learning models
    import tensorflow as tf
    # Numpy contains helpful functions for efficient mathematical calculations
    import numpy as np
    # Image library for image manipulation
    from PIL import Image
    # Utils file
    from utils import tile_raster_images

2. Build the RBM layers

For details on RBMs, see 【】.

To apply a DBN in TensorFlow, create an RBM class as shown below.

    # Note: this class uses the TensorFlow 1.x graph API
    # (tf.placeholder, tf.random_uniform).
    class RBM(object):

        def __init__(self, input_size, output_size):
            # Defining the hyperparameters
            self._input_size = input_size    # Size of input
            self._output_size = output_size  # Size of output
            self.epochs = 5                  # Number of training epochs
            self.learning_rate = 1.0         # The step used in gradient descent
            self.batchsize = 100             # Number of samples used per training sub-iteration

            # Initializing weights and biases as arrays full of zeros
            self.w = np.zeros([input_size, output_size], np.float32)  # Weights
            self.hb = np.zeros([output_size], np.float32)             # Hidden biases
            self.vb = np.zeros([input_size], np.float32)              # Visible biases

        # Fits the result from the weighted visible layer plus the bias into a sigmoid curve
        def prob_h_given_v(self, visible, w, hb):
            # Sigmoid
            return tf.nn.sigmoid(tf.matmul(visible, w) + hb)

        # Fits the result from the weighted hidden layer plus the bias into a sigmoid curve
        def prob_v_given_h(self, hidden, w, vb):
            return tf.nn.sigmoid(tf.matmul(hidden, tf.transpose(w)) + vb)

        # Draw binary (0/1) samples from the given probabilities
        def sample_prob(self, probs):
            return tf.nn.relu(tf.sign(probs - tf.random_uniform(tf.shape(probs))))

        # Training method for the model
        def train(self, X):
            # Create the placeholders for our parameters
            _w = tf.placeholder("float", [self._input_size, self._output_size])
            _hb = tf.placeholder("float", [self._output_size])
            _vb = tf.placeholder("float", [self._input_size])

            # Parameters from the previous iteration, initialized with zeros
            prv_w = np.zeros([self._input_size, self._output_size], np.float32)  # Weights
            prv_hb = np.zeros([self._output_size], np.float32)                   # Hidden biases
            prv_vb = np.zeros([self._input_size], np.float32)                    # Visible biases

            # Parameters for the current iteration, initialized with zeros
            cur_w = np.zeros([self._input_size, self._output_size], np.float32)
            cur_hb = np.zeros([self._output_size], np.float32)
            cur_vb = np.zeros([self._input_size], np.float32)
            # ... (the listing is truncated here in the source)
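To make `prob_h_given_v` and `sample_prob` more concrete, here is a minimal plain-Python sketch of the same two computations for a single visible vector. It is an illustration and not part of the class: the weights, biases, input vector, and the fixed random seed are all made-up values, and it uses Python's `random` module instead of TensorFlow.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def prob_h_given_v(visible, w, hb):
    """P(h_j = 1 | v) = sigmoid(sum_i v_i * w[i][j] + hb[j]) for one visible vector."""
    return [
        sigmoid(sum(v_i * w[i][j] for i, v_i in enumerate(visible)) + hb[j])
        for j in range(len(hb))
    ]


def sample_prob(probs, rng):
    """Bernoulli sample: 1.0 where the probability exceeds a uniform draw.

    Same idea as relu(sign(p - u)): sign gives +1 when p > u, and relu
    maps the remaining -1 and 0 cases to 0.
    """
    return [1.0 if p > rng.random() else 0.0 for p in probs]


rng = random.Random(0)                      # fixed seed for repeatability
visible = [1.0, 0.0, 1.0]                   # made-up visible vector
w = [[0.5, -1.0], [0.3, 0.8], [-0.2, 2.0]]  # made-up 3x2 weight matrix
hb = [0.0, -0.5]                            # made-up hidden biases

probs = prob_h_given_v(visible, w, hb)
samples = [sample_prob(probs, rng) for _ in range(10000)]
freq = [sum(s[j] for s in samples) / len(samples) for j in range(len(hb))]

print("probabilities:", [round(p, 3) for p in probs])
print("sample means: ", [round(f, 3) for f in freq])
```

Over many draws, the fraction of ones for each hidden unit approaches its activation probability, which is what lets the binary samples stand in for the probabilities during training.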
