Simple linear regression

import numpy as np
from sklearn.linear_model import LinearRegression
# scikit-learn expects a 2-D feature matrix, so x is reshaped into a single column
x = np.array([143, 145, 147, 149, 150, 153, 154, 155, 156, 157, 158, 159, 160, 162, 164]).reshape(-1, 1)
y = np.array([88, 85, 88, 91, 92, 93, 95, 96, 98, 97, 96, 98, 99, 100, 102])
model = LinearRegression().fit(x, y)
print('Intercept:', model.intercept_)
print('Slope:', model.coef_)  # array with one coefficient per feature
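For a single feature, the least-squares line has a closed form: slope = Σ(x - x̄)(y - ȳ) / Σ(x - x̄)² and intercept = ȳ - slope·x̄. The sketch below computes both with NumPy only on the same data, which can serve as a cross-check of `model.coef_` and `model.intercept_`; the helper name `least_squares_line` is hypothetical.

```python
import numpy as np

def least_squares_line(x, y):
    """Hypothetical helper: closed-form simple linear regression."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    x_mean, y_mean = x.mean(), y.mean()
    # slope = covariance(x, y) / variance(x); the 1/n factors cancel
    slope = np.sum((x - x_mean) * (y - y_mean)) / np.sum((x - x_mean) ** 2)
    intercept = y_mean - slope * x_mean
    return slope, intercept

x = np.array([143, 145, 147, 149, 150, 153, 154, 155, 156, 157, 158, 159, 160, 162, 164])
y = np.array([88, 85, 88, 91, 92, 93, 95, 96, 98, 97, 96, 98, 99, 100, 102])
slope, intercept = least_squares_line(x, y)
print('Intercept:', intercept)
print('Slope:', slope)
```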

Univariate polynomial regression

$$y = w_0 + w_1 x + w_2 x^2 + w_3 x^3 + \cdots + w_d x^d$$

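`np.polyfit(x, y, deg)` fits a polynomial by least squares; `deg` is the highest degree of the fitted polynomial. It returns the coefficients, highest power first.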

`np.polyval(p, x)` evaluates the polynomial with coefficient array `p` (highest power first) at `x` and returns the resulting values.
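A small sketch of how the two functions fit together, using points sampled from a made-up quadratic y = 2x² + 3x + 1 so the recovered coefficients are known in advance. `np.poly1d` wraps the same coefficient array as a callable polynomial, as the example further down does when printing the fitted equation.

```python
import numpy as np

# Points sampled from a known (made-up) quadratic: y = 2x^2 + 3x + 1
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = 2 * x**2 + 3 * x + 1

p = np.polyfit(x, y, 2)      # coefficients, highest power first: approximately [2, 3, 1]
fitted = np.polyval(p, x)    # evaluate the fitted polynomial at each x
quad = np.poly1d(p)          # callable polynomial; quad(5.0) equals np.polyval(p, 5.0)
print(p)
print(quad(5.0))
```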

import matplotlib.pyplot as plt
import numpy as np
# t = 1/30, 2/30, ..., 14/30 (an integer arange avoids floating-point off-by-one errors at the end point)
x = np.arange(1, 15) / 30
y = np.array([11.86, 15.67, 20.60, 26.69, 33.71, 41.93, 51.13, 61.49, 72.9, 85.44, 99.08, 113.77, 129.54, 146.48])
p = np.polyfit(x, y, 2)  # quadratic coefficients, highest power first
print("Quadratic polynomial:\n", np.poly1d(p))
plt.figure()
plt.title('Quadratic regression fit', fontsize=20)
plt.xlabel('t', fontsize=14)
plt.ylabel('s', fontsize=14)
plt.tick_params(labelsize=10)
plt.grid(linestyle=':')
plt.scatter(x, y, c='#7f7f7f', label='(t, s)', marker='^')
fit_y = np.polyval(p, x)  # fitted s value at each t
plt.plot(x, fit_y, label='Fitted curve')
plt.legend()
plt.show()

Output:

Quadratic polynomial:

489.3 x^2 + 65.89 x + 9.133
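Although the fitted curve is a parabola, the polynomial model is linear in the weights w0 … wd, so fitting it is an ordinary linear least-squares problem on the columns x², x, 1. The sketch below shows this with `np.vander` and `np.linalg.lstsq` on the same (t, s) data; it should agree with `np.polyfit` up to rounding.

```python
import numpy as np

x = np.arange(1, 15) / 30
y = np.array([11.86, 15.67, 20.60, 26.69, 33.71, 41.93, 51.13,
              61.49, 72.9, 85.44, 99.08, 113.77, 129.54, 146.48])

deg = 2
# Design matrix with columns x^2, x^1, x^0 (same order as np.polyfit's output)
A = np.vander(x, deg + 1)
w, residuals, rank, sv = np.linalg.lstsq(A, y, rcond=None)
print('weights (highest power first):', w)
```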

(The R-squared check below is reposted from another blogger's article.)

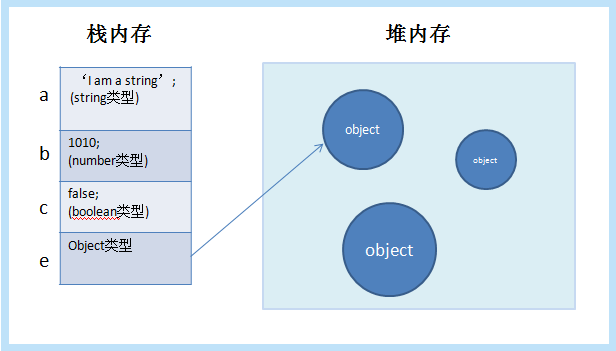

R-squared

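Once a fitted model is available, R-squared (the coefficient of determination) measures how well it fits the data. For a least-squares fit with an intercept, evaluated on its own training data, R-squared lies between 0 and 1: 0 means the model explains none of the variation in y, and 1 means it explains all of it.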

R_square = model_1.score(scaledx, y)  # score() of a *fitted* regressor returns R^2 (model_1 and scaledx are defined in the multivariate example below)

from sklearn.metrics import r2_score
# reuses x and y from the quadratic example above
getmodel = np.poly1d(np.polyfit(x, y, 2))
R_square = r2_score(y, getmodel(x))
# closer to 1 means a better fit
print('R_square: {:.2f}'.format(R_square))

Output: R_square: 1.00
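In formula form, R² = 1 - SS_res / SS_tot, where SS_res = Σ(yᵢ - ŷᵢ)² and SS_tot = Σ(yᵢ - ȳ)². The NumPy sketch below computes it by hand (the helper name `r_squared` is hypothetical). It also shows why the 0-to-1 range is tied to least-squares fits on their training data: a prediction that does worse than simply using the mean of y gets a negative value.

```python
import numpy as np

def r_squared(y_true, y_pred):
    """Hypothetical helper: coefficient of determination R^2 = 1 - SS_res / SS_tot."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    ss_res = np.sum((y_true - y_pred) ** 2)          # residual sum of squares
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)   # total sum of squares
    return 1.0 - ss_res / ss_tot

y = np.array([3.0, 5.0, 7.0, 9.0])
print(r_squared(y, y))                       # perfect prediction -> 1.0
print(r_squared(y, np.full(4, y.mean())))    # always predicting the mean -> 0.0
print(r_squared(y, y[::-1]))                 # worse than the mean -> -3.0
```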

Multivariate quadratic polynomial regression

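Multivariate quadratic regression can be converted into multivariate linear regression: each squared (or cross-product) term is treated as an additional feature, and the model stays linear in its coefficients.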
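For two features x1 and x2, a full quadratic model y = b + w1·x1 + w2·x2 + w3·x1² + w4·x2² + w5·x1·x2 is linear in its weights, so it can be solved as a multiple linear regression once the extra columns are added. A NumPy-only sketch on synthetic data; the coefficients are made up for illustration and the helper `quadratic_features` is hypothetical (scikit-learn's `PolynomialFeatures(degree=2)` builds the same kind of columns).

```python
import numpy as np

def quadratic_features(x1, x2):
    """Hypothetical helper: columns [1, x1, x2, x1^2, x2^2, x1*x2]."""
    return np.column_stack([np.ones_like(x1), x1, x2, x1**2, x2**2, x1 * x2])

rng = np.random.default_rng(0)
x1 = rng.uniform(-2, 2, 50)
x2 = rng.uniform(-2, 2, 50)

# Synthetic target with made-up coefficients and no noise, so they can be recovered
true_w = np.array([1.0, 2.0, -1.0, 0.5, 3.0, -2.0])
X = quadratic_features(x1, x2)
y = X @ true_w

w, *_ = np.linalg.lstsq(X, y, rcond=None)   # ordinary linear least squares
print(np.round(w, 6))
```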

Data file: 回归.csv

import pandas as pd
from sklearn.preprocessing import StandardScaler
from sklearn import linear_model
from sklearn.metrics import r2_score
df = pd.read_csv('../file/回归.csv')  # read_csv opens and closes the file itself
x = df[["TV", "radio", "newspaper"]]
y = df['sales']
# Standardize the features (zero mean, unit variance)
scaler = StandardScaler()
scaledx = scaler.fit_transform(x)
# Linear regression model
method = linear_model.LinearRegression()
model_1 = method.fit(scaledx, y)
coef_, intercept_ = model_1.coef_, model_1.intercept_
print('Regression coefficients: {}, intercept: {}'.format(coef_, intercept_))
# Check the goodness of fit with R-squared
predict_y = model_1.predict(scaledx)
R_square = r2_score(y, predict_y)
print('R_square is: ', R_square)
# print("R^2:", model_1.score(scaledx, y))  # equivalent: score() returns R^2
# If the model is acceptable, use it for prediction
# (new data must first be passed through scaler.transform)
pre_y = model_1.predict(scaledx)
print('Predicted sales: ', pre_y)

Output:

Regression coefficients: [ 4.43106384 2.86518497 -0.76465975], intercept: 12.233333333333334

R_square is: 0.8877726430363375

Predicted sales: [20.05376896 10.84515786 9.7737715 16.66202644 13.01723166 9.65694334 11.07624917 12.58688772 3.76535383 13.74020227 6.87302861 18.74937863]
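Because the model above was fit on standardized features, each entry of `coef_` is the change in sales per one standard deviation of that feature, not per raw unit. Dividing by each feature's standard deviation gives raw-unit slopes, and the intercept shifts accordingly. The sketch below checks this back-transformation with NumPy only, on synthetic data (not the CSV), mimicking what `StandardScaler` does: subtract the mean and divide by the population standard deviation (ddof=0). The helper `fit_with_intercept` is hypothetical and stands in for `LinearRegression`.

```python
import numpy as np

rng = np.random.default_rng(1)
X = rng.uniform(0, 100, size=(30, 3))                   # synthetic raw features
y = X @ np.array([0.05, 0.2, -0.01]) + 3 + rng.normal(0, 0.5, 30)

def fit_with_intercept(A, y):
    """Hypothetical helper: least squares with an intercept column; returns (intercept, coefficients)."""
    A1 = np.column_stack([np.ones(len(A)), A])
    w, *_ = np.linalg.lstsq(A1, y, rcond=None)
    return w[0], w[1:]

mu, sigma = X.mean(axis=0), X.std(axis=0)               # what StandardScaler would store
b_std, w_std = fit_with_intercept((X - mu) / sigma, y)  # fit on standardized features

# Back-transform: y = b_std + sum(w_std * (x - mu) / sigma)
w_raw = w_std / sigma
b_raw = b_std - np.sum(w_std * mu / sigma)

b_direct, w_direct = fit_with_intercept(X, y)           # fit on raw features directly
print(w_raw, w_direct)
print(b_raw, b_direct)
```

With centered features the fitted intercept equals the mean of y, which matches how the intercept in the output above (12.2333…) relates to the average of the sales column.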

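import numpy as np
from sklearn import linear_model
# columns: x1, x2, x1^2, x2^2 (the quadratic terms are added as extra linear features)
# note: 600**2 = 360000 and 500**2 = 250000, so the x1^2 entries in rows 2 and 4 (3600, 2500)
# do not match their x1 values; they are kept as listed in the original data
x = np.array([[1000, 5, 1000000, 25],
              [600, 7, 3600, 49],
              [1200, 6, 1440000, 36],
              [500, 6, 2500, 36],
              [300, 8, 90000, 64],
              [400, 7, 160000, 49],
              [1300, 5, 1690000, 25],
              [1100, 4, 1210000, 16],
              [1300, 3, 1690000, 9],
              [300, 9, 90000, 81]])
y = np.array([100, 75, 80, 70, 50, 65, 90, 100, 110, 60])
regr = linear_model.LinearRegression()  # key line
regr.fit(x, y)  # fit the model
print("Goodness of fit R^2: ", regr.score(x, y))  # score() returns R^2
print("Coefficients", regr.coef_)  # k1..k4 in y = k1*x1 + k2*x2 + k3*x1^2 + k4*x2^2 + b

![Figure 1](https://images.43s.cn/wp-content/uploads//2022/07/1657467783297_8.png)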

Output:

Goodness of fit R^2: 0.9334881295648378

Coefficients [ 5.57116345e-02 -2.11716380e+01 -2.07999201e-05 1.29129841e+00]
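In the array above, the third and fourth columns are meant to be x1² and x2² of the first two. Deriving them from the raw columns, rather than typing them in, rules out transcription mistakes and makes it easy to build identical features for new inputs at prediction time. A NumPy-only sketch: the helper `add_squares` and the new input (x1 = 800, x2 = 6) are hypothetical, and the least-squares solve stands in for `LinearRegression`, so its numbers are not expected to match the output above.

```python
import numpy as np

# Raw inputs: the first two columns of the table above
x1 = np.array([1000, 600, 1200, 500, 300, 400, 1300, 1100, 1300, 300], dtype=float)
x2 = np.array([5, 7, 6, 6, 8, 7, 5, 4, 3, 9], dtype=float)
y = np.array([100, 75, 80, 70, 50, 65, 90, 100, 110, 60], dtype=float)

def add_squares(x1, x2):
    """Hypothetical helper: feature columns [x1, x2, x1^2, x2^2]."""
    return np.column_stack([x1, x2, x1**2, x2**2])

X = add_squares(x1, x2)
A = np.column_stack([np.ones(len(X)), X])      # prepend an intercept column
w, *_ = np.linalg.lstsq(A, y, rcond=None)      # w[0] is the intercept, w[1:] the coefficients

# Predict for a new, hypothetical input x1 = 800, x2 = 6 using the same feature builder
x_new = np.column_stack([np.ones(1), add_squares(np.array([800.0]), np.array([6.0]))])
print('prediction:', x_new @ w)

# R^2 on the training data
pred = A @ w
r2 = 1 - np.sum((y - pred) ** 2) / np.sum((y - y.mean()) ** 2)
print('R^2:', r2)
```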

Nonlinear regression

popt, pcov = curve_fit(exp_func, x_arr, y_arr)  # key line: popt holds the fitted parameters, pcov their estimated covariance matrix

(The R^2 goodness-of-fit computation below is taken from another blogger's article.)

import numpy as np
from scipy.optimize import curve_fit
import matplotlib.pyplot as plt
# Ignore divide-by-zero warnings
np.seterr(divide='ignore', invalid='ignore')
# Data set to fit
x_arr = np.arange(2, 17, 1)
y_arr = np.array([6.48, 8.2, 9.58, 9.5, 9.7, 10, 9.93, 9.99, 10.49, 10.59, 10.60, 10.8, 10.6, 10.9, 10.76])
def exp_func(x, a, b):
    # model: y = a * e^(b / x)
    return a * np.exp(b / x)
# ######################## goodness of fit R^2 ########################
def __sst(y_no_fitting):
    """Total sum of squares SST of the observed y values."""
    y_mean = sum(y_no_fitting) / len(y_no_fitting)
    return sum((y - y_mean) ** 2 for y in y_no_fitting)
def __ssr(y_fitting, y_no_fitting):
    """Regression sum of squares SSR: fitted values vs. the mean of the observed y."""
    y_mean = sum(y_no_fitting) / len(y_no_fitting)
    return sum((y - y_mean) ** 2 for y in y_fitting)
def __sse(y_fitting, y_no_fitting):
    """Error (residual) sum of squares SSE."""
    return sum((yf - yo) ** 2 for yf, yo in zip(y_fitting, y_no_fitting))
def goodness_of_fit(y_fitting, y_no_fitting):
    """R^2 computed as SSR / SST.
    SSR / SST equals 1 - SSE / SST only for linear least squares with an intercept;
    for a nonlinear model such as exp_func the two values can differ."""
    ssr = __ssr(y_fitting, y_no_fitting)
    sst = __sst(y_no_fitting)
    return ssr / sst
popt, pcov = curve_fit(exp_func, x_arr, y_arr)  # popt holds the fitted (a, b)
a_exp, b_exp = popt
print("Coefficients: a = %.5f, b = %.5f" % (a_exp, b_exp))
pre_y = exp_func(x_arr, a_exp, b_exp)
print("Predicted values of the exponential curve: ", pre_y)
rr_exp = goodness_of_fit(pre_y, y_arr)  # R^2 check
print("Goodness of fit of the exponential curve: %.5f" % rr_exp)
plt.figure(figsize=(8, 6))
plt.plot(x_arr, pre_y, color="#4682B4", label='Fitted curve a*e^(b/x)')
plt.scatter(x_arr, y_arr, color='#FFB6C1', marker="*", label='Original data')
plt.xlabel('x')
plt.ylabel('y')
plt.legend(loc=4)  # loc=4: lower right
plt.title('curve_fit exponential fit')
plt.show()

Output:

Coefficients: a = 11.58931, b = -1.05292

Predicted values of the exponential curve: [ 6.84572463 8.15890905 8.907143 9.38863001 9.7239957 9.97085227 10.16009864 10.30977008 10.43109317 10.53141869 10.61575999 10.68765306 10.74966309 10.80369606 10.85119766]

Goodness of fit of the exponential curve: 0.94156

![Figure 2](https://images.43s.cn/wp-content/uploads//2022/07/1657467783297_11.png)

![Figure 3](https://images.43s.cn/wp-content/uploads//2022/07/1657467783297_12.png)
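Taking logarithms turns y = a·e^(b/x) into ln y = ln a + b·(1/x), a straight line in 1/x. A linear fit on (1/x, ln y) therefore gives quick estimates of a and b. They will generally differ somewhat from the `curve_fit` result, which minimizes squared error in y rather than in ln y, but they make a reasonable starting guess, for example as `p0` for `curve_fit`. A sketch assuming all x ≠ 0 and all y > 0; the helper name is hypothetical.

```python
import numpy as np

def fit_exp_inverse_linearized(x, y):
    """Hypothetical helper: estimate a, b in y = a * exp(b / x)
    from a straight-line fit of ln(y) against 1/x. Assumes x != 0 and y > 0."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    slope, intercept = np.polyfit(1.0 / x, np.log(y), 1)
    return np.exp(intercept), slope   # a = e^intercept, b = slope

# Same data set as the curve_fit example
x_arr = np.arange(2, 17, 1)
y_arr = np.array([6.48, 8.2, 9.58, 9.5, 9.7, 10, 9.93, 9.99, 10.49,
                  10.59, 10.60, 10.8, 10.6, 10.9, 10.76])
a0, b0 = fit_exp_inverse_linearized(x_arr, y_arr)
print('initial estimates: a = %.5f, b = %.5f' % (a0, b0))
# these could be passed on as: curve_fit(exp_func, x_arr, y_arr, p0=[a0, b0])
```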
