This article exists only to test whether the API file is working properly. If you can see it on your website, the API file is working. Please delete this article and start collecting!

Tags: tag1 tag2 tag3

Link test: baidu

Youcai cloud collector is a website collector that automatically gathers relevant articles and publishes them to users' websites, based on keywords the users provide. It automatically identifies the title, body text and other information on all kinds of web pages, so it can collect from the whole web without users writing any collection rules. After collecting content, it calculates the correlation between the content and the configured keywords and pushes only the relevant articles to the user. It supports a range of SEO functions, such as intelligent titles, title prefixes, automatic keyword bolding, fixed link insertion, automatic tag extraction, automatic internal links, automatic image matching, automatic pseudo-original rewriting, content filtering and replacement, removal of phone numbers and website addresses, scheduled collection, and Baidu active submission. Users only need to set keywords and related requirements to get fully hosted, zero-maintenance content updates for their websites. There is no limit on the number of sites: a single site or a large group of sites can be managed just as conveniently.

R & D background

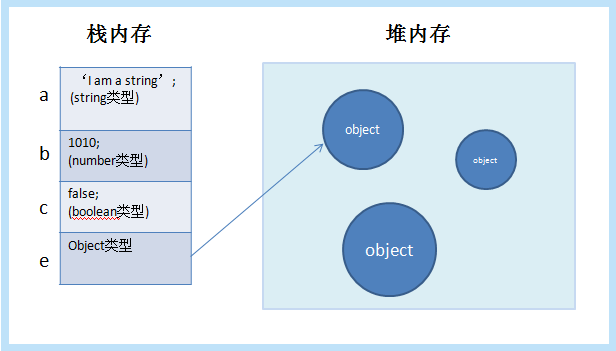

An article collector is an essential tool for most webmasters who need to keep a website updated. To extract web information, a traditional collector mainly matches page content with regular expressions, which runs fast and is not difficult to implement. However, different websites have different page structures and need different collection rules, which makes for a heavy workload and difficult maintenance. In addition, users have to find their own collection sources and keep a machine running to operate the collector, and they may run into further problems such as blocked IP addresses and the need for proxy IPs.

Main functions

Against this background, YouCaiyun was launched in 2016. Its functions are as follows:

It provides a large keyword database on the order of 100 million+ entries, which can be searched with any text the user enters. After simply ticking the keywords they want, users can put them to use for collection, which greatly reduces the time and effort spent gathering keywords.

Users can also create their own private keyword libraries, organized into separate groups. Millions of keywords can be managed easily, which meets more personalized keyword needs.

Articles are collected by keyword. High-quality articles are gathered from across the web through Baidu, Sogou, Haosou and other search engines, so users do not have to spend effort finding collection sources.

The page encoding, title, body text and other information are identified automatically. There is no need to set up different collection rules for each website, to find someone to write collection rules, or to understand HTML source code, so maintenance is zero.

You can set the required length of the body text, such as 500, 750 or 1000 words. Content that falls short of the required length is discarded automatically.
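The length rule above can be sketched as a simple filter. This is only an illustration: the function names are hypothetical, and counting whitespace-separated words is an assumption (for Chinese text, counting characters would be the more natural measure).

```python
def meets_min_length(text: str, min_words: int) -> bool:
    """Return True when the text has at least min_words whitespace-separated words."""
    return len(text.split()) >= min_words


def filter_by_length(articles: list[str], min_words: int = 500) -> list[str]:
    """Keep only the articles that reach the required length (hypothetical helper)."""
    return [article for article in articles if meets_min_length(article, min_words)]
```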

A variety of natural language processing algorithms are built into the system. They automatically calculate the correlation between the body text and the keywords (cosine distance between feature vectors), filter out articles with low correlation, and leave only highly relevant articles for users.
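As a rough illustration of the cosine measure mentioned above, the sketch below compares plain word-count vectors. The real feature vectors are not described in this article, so the bag-of-words representation, the function names and the threshold value are all assumptions. Cosine distance is simply 1 minus the similarity computed here.

```python
import math
import re
from collections import Counter


def term_vector(text: str) -> Counter:
    """Build a bag-of-words vector of lowercase word counts (assumed feature type)."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse count vectors; 0.0 if either is empty."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def is_relevant(article: str, keyword: str, threshold: float = 0.1) -> bool:
    """Keep an article when its similarity to the keyword reaches a hypothetical threshold."""
    return cosine_similarity(term_vector(article), term_vector(keyword)) >= threshold
```

An article that shares no words with the keyword scores 0.0 and is filtered out; one that repeats the keyword often scores closer to 1.0.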

The fluency of the text is calculated automatically (language perplexity). Articles with low fluency are discarded, and articles with high fluency are kept for users.
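Assuming the measure in the paragraph above is language-model perplexity, a toy version can be sketched with a bigram model and add-one smoothing. The model type, the function names and the tiny training text are assumptions for illustration; a production system would more likely use a much larger n-gram or neural language model. Lower perplexity means the word sequence looks more like the training text.

```python
import math
from collections import Counter


def train_bigram(tokens: list[str]) -> tuple[Counter, Counter, int]:
    """Count context words, adjacent word pairs, and the vocabulary size."""
    contexts = Counter(tokens[:-1])             # how often each word starts a pair
    pairs = Counter(zip(tokens, tokens[1:]))    # how often each adjacent pair occurs
    return contexts, pairs, len(set(tokens))


def perplexity(tokens: list[str], contexts: Counter, pairs: Counter, vocab_size: int) -> float:
    """Perplexity of a token sequence under the add-one smoothed bigram counts."""
    bigrams = list(zip(tokens, tokens[1:]))
    if not bigrams:
        return float("inf")  # too short to score
    log_prob = 0.0
    for prev, word in bigrams:
        # Add-one smoothing keeps unseen pairs from getting zero probability.
        prob = (pairs[(prev, word)] + 1) / (contexts[prev] + vocab_size)
        log_prob += math.log(prob)
    return math.exp(-log_prob / len(bigrams))
```

A collector could then discard any article whose perplexity exceeds a chosen cutoff.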

The correlation between the title and description and the keywords is calculated automatically. If the correlation is low, keywords can be inserted into the title and description automatically to improve relevance. You can also set prefix keywords for titles, and one of the prefixes is chosen at random and added to each article's title.

Text recognition based on machine learning algorithms audits the collected content to keep users' content safe.

The pseudo-original function is based on synonym replacement. The most suitable words are selected from a database of 20 million synonym pairs to replace words in the original text, while keeping the article readable.
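A minimal sketch of synonym replacement is shown below. It uses a small fixed one-to-one dictionary, whereas the paragraph above describes choosing the most suitable word from a very large database; that selection step is not modeled here. The function name and the sample dictionary are hypothetical.

```python
import re


def replace_synonyms(text: str, synonyms: dict[str, str]) -> str:
    """Replace each known word with its synonym, preserving a leading capital letter."""

    def swap(match: re.Match) -> str:
        word = match.group(0)
        replacement = synonyms.get(word.lower())
        if replacement is None:
            return word  # no synonym known, keep the original word
        return replacement.capitalize() if word[0].isupper() else replacement

    # Match runs of ASCII letters only, so punctuation and spacing are untouched.
    return re.sub(r"[A-Za-z]+", swap, text)


print(replace_synonyms("Big dogs run fast.", {"big": "large", "fast": "quickly"}))
# prints "Large dogs run quickly."
```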

The intelligent AI pseudo-original function is based on machine learning. The original text is encoded into a high-dimensional semantic vector and then decoded word by word by a decoder, which rewrites the whole article. The degree of rewriting is high and readability is good.

Tags are extracted automatically and used to build internal links automatically: when the text of a tag appears in the body, a link is added to it that points to another article on the same topic, which builds internal links in a systematic and effective way.

You can also set fixed links. When certain fixed text appears in the body, a fixed link is added to it, pointing to an article on or off the site.
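Fixed link insertion can be sketched as a single regular-expression pass over the body. The function name, the choice to link only the first occurrence of each phrase, and the assumption that the input is plain body text without existing HTML tags are all illustrative.

```python
import html
import re


def insert_fixed_links(text: str, links: dict[str, str]) -> str:
    """Wrap the first occurrence of each configured phrase in an anchor tag."""
    if not links:
        return text
    # Longest phrases first, so "cloud collector" wins over a shorter "cloud".
    phrases = sorted(links, key=len, reverse=True)
    pattern = re.compile("|".join(re.escape(p) for p in phrases))
    seen: set[str] = set()

    def link(match: re.Match) -> str:
        phrase = match.group(0)
        if phrase in seen:
            return phrase  # only the first occurrence gets a link
        seen.add(phrase)
        url = html.escape(links[phrase], quote=True)
        return f'<a href="{url}">{phrase}</a>'

    # One pass over the text, so markup inserted here is never rescanned.
    return pattern.sub(link, text)
```

Linking only the first occurrence is a design choice in this sketch; it avoids stuffing an article with repeated identical links.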

Images are matched automatically to the content of the article, so that even collected articles come with illustrations.

You can choose to save images locally or to use remote images, and you can also block all images.

You can block collection from certain websites, or block content that contains certain words.
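A blocking rule of this kind could be checked as in the sketch below. The function name, the matching of subdomains and the case-insensitive word check are assumptions made for the example.

```python
from urllib.parse import urlparse


def is_blocked(url: str, text: str, blocked_domains: set[str], blocked_words: set[str]) -> bool:
    """Return True when the source domain or the content matches a block rule."""
    host = (urlparse(url).hostname or "").lower()
    # Block the listed domain itself and any of its subdomains.
    if any(host == d or host.endswith("." + d) for d in blocked_domains):
        return True
    lowered = text.lower()
    return any(word.lower() in lowered for word in blocked_words)
```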

Redundant information before and after the article, such as contact details, website addresses and advertising, is filtered out automatically, and all tags are cleared. Only paragraph tags and image tags are kept in the body, with no stray code and no typesetting markup, which makes it easy for users to customize the appearance with CSS.
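The tag clean-up described above can be sketched with Python's standard `html.parser` module. The class and function names are hypothetical, and dropping every attribute except the image `src` is an assumption about what "no typesetting format" means; removal of contact details and advertising is not covered here.

```python
from html import escape
from html.parser import HTMLParser


class BodyCleaner(HTMLParser):
    """Keep only <p> and <img src=...>; drop all other tags and attributes."""

    def __init__(self) -> None:
        super().__init__(convert_charrefs=True)
        self.parts: list[str] = []
        self._skip_depth = 0  # greater than 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1
        elif tag == "p":
            self.parts.append("<p>")
        elif tag == "img":
            src = dict(attrs).get("src")
            if src:
                self.parts.append(f'<img src="{escape(src, quote=True)}">')

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1
        elif tag == "p":
            self.parts.append("</p>")

    def handle_data(self, data):
        if self._skip_depth == 0:
            self.parts.append(escape(data, quote=False))


def clean_body(html_text: str) -> str:
    """Return the body with only paragraph and image tags left in place."""
    cleaner = BodyCleaner()
    cleaner.feed(html_text)
    cleaner.close()
    return "".join(cleaner.parts)
```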

A strict anti-duplication mechanism applies: across the entire platform, each URL is collected only once. Within the same website, articles with the same title are collected only once.
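One way to picture the two duplication rules is the in-memory sketch below. It reads the first rule as "each source URL is collected only once across the platform", which is an interpretation rather than something this article spells out. The class name, the `site_id` parameter and the title normalization are also assumptions; a real service would keep this state in a database.

```python
class DedupIndex:
    """Track collected source URLs platform-wide and titles per user website."""

    def __init__(self) -> None:
        self._seen_urls: set[str] = set()
        self._seen_titles: dict[str, set[str]] = {}

    def accept(self, site_id: str, url: str, title: str) -> bool:
        """Return True and record the article if it is new, otherwise return False."""
        # Normalize case and whitespace so trivially different titles still match.
        norm_title = " ".join(title.lower().split())
        titles = self._seen_titles.setdefault(site_id, set())
        if url in self._seen_urls or norm_title in titles:
            return False
        self._seen_urls.add(url)
        titles.add(norm_title)
        return True
```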

You can specify how many articles may be collected for each keyword, which makes it possible to cover a large number of long-tail keywords without repetition.

Collection tasks run automatically in the cloud, at set times and in set quantities. Users do not need to install any software on their own computers, keep a machine running for collection, or even open a browser.

After collection, articles are published automatically to the back end of the user's website. Users only need to download the interface file and upload it to the root directory of the website to complete the integration.

After collection, Baidu active push is executed automatically, so that spiders can find your articles quickly.
